A Survey of Optimization in Deep Neural Network

This is my final project for the Mathematical Principle of Machine Learning course.

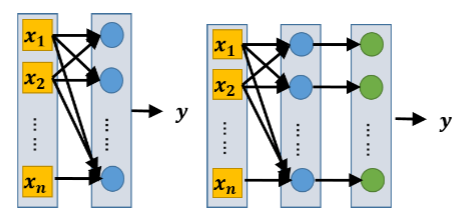

Deep neural networks (DNNs) now play an important role in many fields. Convergence analysis is essential for guaranteeing the performance of a DNN model, because it characterizes how the model reduces the value of the loss function during training. We focus on the convergence analysis of classical DNN models, namely why the model reduces the loss function and how fast it does so. An important requirement for guaranteeing the convergence rate is over-parameterization, meaning that the network has a sufficiently large number of parameters. Our analysis proceeds from basic cases to general DNN models. By generalizing the concepts used in the analysis of the basic cases, we show that the convergence rate of a general DNN model can be guaranteed via over-parameterization.
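To make the idea of an over-parameterized convergence guarantee concrete, here is a minimal NumPy sketch of the simplest setting commonly studied in this line of work. It is an assumption for illustration, not necessarily the exact model analyzed in the report: a two-layer ReLU network $f(x) = \frac{1}{\sqrt{m}} \sum_{r=1}^{m} a_r \,\mathrm{ReLU}(w_r^\top x)$ with a wide hidden layer ($m$ neurons), fixed output weights $a_r \in \{-1, +1\}$, unit-norm inputs, and full-batch gradient descent on the first-layer weights under the squared loss $L = \frac{1}{2}\sum_i (f(x_i) - y_i)^2$. The function name `train_two_layer_relu` and all default parameter values are hypothetical.

```python
import numpy as np


def train_two_layer_relu(n=10, d=5, m=4096, eta=0.2, steps=2000, seed=0):
    """Full-batch gradient descent on a wide two-layer ReLU network (illustrative).

    Assumed setting (not taken from the report):
        f(x) = (1/sqrt(m)) * sum_r a_r * relu(w_r . x),
    where the output weights a_r in {-1, +1} stay fixed and only the
    first-layer matrix W (shape m x d) is trained on
        L = 0.5 * sum_i (f(x_i) - y_i)^2.
    Returns the training loss before each update (length steps + 1).
    """
    rng = np.random.default_rng(seed)

    # Synthetic data: unit-norm inputs and bounded labels.
    X = rng.standard_normal((n, d))
    X /= np.linalg.norm(X, axis=1, keepdims=True)
    y = rng.uniform(-1.0, 1.0, size=n)

    # Random initialization: w_r ~ N(0, I), a_r uniform on {-1, +1}.
    W = rng.standard_normal((m, d))
    a = rng.choice([-1.0, 1.0], size=m)

    losses = []
    for t in range(steps + 1):
        Z = X @ W.T                                   # pre-activations, shape (n, m)
        res = np.maximum(Z, 0.0) @ a / np.sqrt(m) - y  # residuals f(x_i) - y_i, shape (n,)
        losses.append(0.5 * float(res @ res))
        if t == steps:
            break
        # Row r of the gradient: (a_r / sqrt(m)) * sum_i res_i * 1[w_r . x_i > 0] * x_i
        grad = ((res[:, None] * (Z > 0)) * a).T @ X / np.sqrt(m)
        W -= eta * grad
    return losses


losses = train_two_layer_relu()
for t in (0, 500, 1000, 2000):
    print(t, losses[t])
```

With a large width $m$, the loss in this sketch should shrink roughly geometrically, which is the kind of linear convergence rate that over-parameterization arguments aim to guarantee. One way to probe the role of over-parameterization is to rerun the function with a much smaller `m` and compare the resulting loss curves.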

Technical Report